RAPOSA-NG


[[Image:RaposaNG description.png|600px]]

== Description ==

Following the success of RAPOSA, the IdMind company developed a commercial version of the robot, improving it in various ways. Notably, the rigid chassis of RAPOSA, which eventually became plastically deformed by frequent shocks, was replaced by a semi-flexible structure capable of absorbing non-elastic shocks while being significantly lighter than the original RAPOSA.

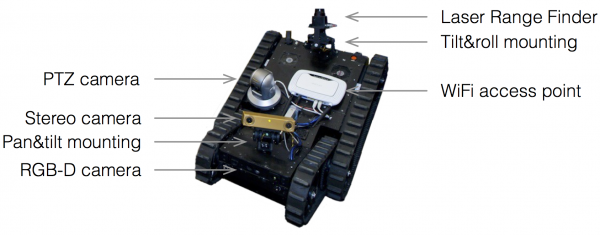

ISR acquired a barebones version of this robot, called RAPOSA-NG, and equipped it with a different set of sensors, following lessons learnt from previous research with RAPOSA. In particular, it is equipped with:

* a stereo camera unit (PointGrey Bumblebee2) on a pan-and-tilt motorized mounting;

* a Laser-Range Finder (LRF) sensor on a tilt-and-roll motorized mounting;

* a pan-tilt-and-zoom (PTZ) IP camera;

* an Inertial Measurement Unit (IMU).

This equipment was chosen not only to better fit our research interests, but also with the RoboCup Robot Rescue competition in mind.

The stereo camera is primarily used jointly with a Head-Mounted Display (HMD) worn by the operator: the stereo images are displayed on the HMD, thus providing depth perception to the operator, while the stereo camera attitude is controlled by the head tracker built into the HMD.

The LRF is used in one of two modes: 2D mapping and 3D mapping. In 2D mapping we assume that the environment is made of vertical walls. However, since we cannot assume horizontal ground, we use a tilt-and-roll motorized mounting to automatically compensate for the robot attitude: an internal IMU measures the attitude of the robot body and controls the mounting servos such that the LRF scanning plane remains horizontal.
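The attitude compensation described above can be illustrated with a minimal sketch. It is not the code running on RAPOSA-NG. It assumes a ZYX (yaw-pitch-roll) attitude convention for the IMU, a mounting whose roll joint is attached to the body and carries the tilt joint, and a hypothetical servo limit of 45 degrees. The names <code>mount_commands</code> and <code>scan_plane_normal</code> are invented for this illustration. Under these assumptions, commanding each joint with the negative of the measured body angle leaves only the yaw rotation, so the scanning plane stays horizontal.

```python
import math

def rot_x(a):
    """Rotation matrix about the x axis (roll)."""
    c, s = math.cos(a), math.sin(a)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]

def rot_y(a):
    """Rotation matrix about the y axis (pitch / tilt)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]

def rot_z(a):
    """Rotation matrix about the z axis (yaw)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def mount_commands(roll, pitch, limit=math.radians(45)):
    """Return (mount_roll, mount_tilt) in radians cancelling the body attitude.

    The limit is a hypothetical servo range, not a RAPOSA-NG specification.
    """
    def clamp(v):
        return max(-limit, min(limit, v))
    return clamp(-roll), clamp(-pitch)

def scan_plane_normal(roll, pitch, yaw, mount_roll, mount_tilt):
    """World-frame normal of the LRF scanning plane (the sensor's z axis)."""
    # Assumed body attitude convention: R = Rz(yaw) * Ry(pitch) * Rx(roll).
    body = matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))
    # Assumed joint order: roll joint on the body, then the tilt joint.
    mount = matmul(rot_x(mount_roll), rot_y(mount_tilt))
    lrf = matmul(body, mount)
    return [lrf[0][2], lrf[1][2], lrf[2][2]]

if __name__ == "__main__":
    roll, pitch, yaw = math.radians(10), math.radians(-15), math.radians(30)
    mount_roll, mount_tilt = mount_commands(roll, pitch)
    # With compensation the normal points straight up: the plane is horizontal.
    print(scan_plane_normal(roll, pitch, yaw, mount_roll, mount_tilt))
```

If the joints were stacked in the opposite order (tilt on the body, roll on top), simply negating the two angles would no longer cancel the attitude exactly, and the commands would have to be derived from the full rotation matrix.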

The IP camera is used for detailed inspection: its GUI allows the operator to orient the camera towards a target area and zoom in on a small region of the environment. This is particularly relevant for remote inspection tasks in Urban Search and Rescue (USAR). The IMU is used both to provide the remote operator with readings of the robot's attitude and for automatic localization and mapping.

Further information can be found in the book chapter [http://link.springer.com/chapter/10.1007/978-3-319-05431-5_12 Two Faces of Human–Robot Interaction: Field and Service Robots (Rodrigo Ventura)], in New Trends in Medical and Service Robots, Mechanisms and Machine Science, Volume 20, pp. 177-192, Springer, 2014.

And check out our [https://www.facebook.com/socrob.rescue Facebook page]!

== Team ==

* Rodrigo Ventura (coordinator)

* Filipe Jesus

* João Mendes

* João O'Neill

== Videos ==

<html><iframe width="560" height="315" src="//www.youtube.com/embed/edXl8UNH-UE" frameborder="0" allowfullscreen></iframe></html>

<html><iframe width="560" height="315" src="//www.youtube.com/embed/XXXmA3iVKL0" frameborder="0" allowfullscreen></iframe></html>


Latest revision as of 15:48, 18 November 2014
