Cooperative Active Perception

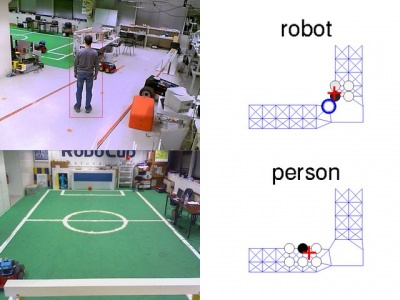

One of the recent research topics at the Intelligent Systems Lab is cooperative active perception. In our context, cooperative perception refers to the fusion of sensory information between fixed surveillance cameras and robots, with the goal of maximizing the amount and quality of perceptual information available to the system. A robot can use this information to choose its actions, and the system as a whole can use it to maintain a global picture for monitoring. In general, incorporating information from spatially distributed sensors raises the level of situational awareness.
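As a minimal sketch of what such fusion can look like, assume the system tracks a single person who can be in one of a few discrete cells, and that each sensor (a fixed camera or a robot) reports a likelihood vector P(z | x) over those cells. If we further assume that the sensors' observations are conditionally independent given the person's true location, Bayesian fusion reduces to multiplying the prior by each likelihood and renormalizing. The function name `fuse` and all numbers below are hypothetical and only illustrate the idea.

```python
import numpy as np


def fuse(prior, likelihoods):
    """Fuse sensor likelihoods into a posterior over discrete states.

    Assumes observations from different sensors are conditionally
    independent given the true state, so the posterior is proportional
    to prior(x) * prod_k P(z_k | x).
    """
    posterior = np.asarray(prior, dtype=float).copy()
    for lik in likelihoods:
        posterior *= np.asarray(lik, dtype=float)  # Bayes' rule, one sensor at a time
    total = posterior.sum()
    if total == 0:
        raise ValueError("observations are inconsistent with the prior")
    return posterior / total  # renormalize to a probability distribution


# Hypothetical scenario: a person is in one of four corridor cells.
prior = np.full(4, 0.25)
camera_lik = [0.7, 0.2, 0.05, 0.05]  # fixed surveillance camera
robot_lik = [0.6, 0.1, 0.2, 0.1]     # robot's on-board sensor
posterior = fuse(prior, [camera_lik, robot_lik])
print(posterior.round(3))
```

With a uniform prior, the camera alone would put 0.7 on cell 0 and the robot alone 0.6; the fused posterior puts about 0.92 there, which is the kind of gain in situational awareness that combining distributed sensors is meant to provide.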

Active perception means that an agent considers the effects of its actions on its sensors, and in particular tries to improve their performance. This can mean selecting sensory actions, for instance pointing a pan-and-tilt camera or choosing to execute an expensive vision algorithm, or influencing a robot's path planning: given two routes to a desired location, for example, take the more informative one. Performance can be measured by trading off the cost of executing actions against how much they improve the quality of the information available to the system; how this trade-off is made should be derived from the system's task. Combining the two concepts, cooperative active perception is the problem of active perception involving multiple sensors and multiple cooperating decision makers.
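The cost/benefit trade-off described above can be sketched for a single sensing decision. Under assumptions introduced purely for illustration, the code scores each candidate action by its expected information gain (the expected reduction in Shannon entropy of the belief, in bits) minus a cost expressed in the same units, and picks the highest score. The action names, observation models, and costs are hypothetical, and this is a greedy one-step rule rather than a full POMDP policy.

```python
import numpy as np


def entropy(belief):
    """Shannon entropy (bits) of a discrete belief."""
    b = np.asarray(belief, dtype=float)
    nz = b[b > 0]  # 0 * log 0 is taken as 0
    return float(-(nz * np.log2(nz)).sum())


def expected_info_gain(belief, obs_model):
    """Expected entropy reduction from one sensing action.

    obs_model[x, z] = P(z | x) for that action.
    """
    belief = np.asarray(belief, dtype=float)
    p_z = belief @ obs_model  # marginal probability of each observation z
    expected_h = 0.0
    for z, pz in enumerate(p_z):
        if pz > 0:
            posterior = belief * obs_model[:, z] / pz  # Bayes update for outcome z
            expected_h += pz * entropy(posterior)
    return float(entropy(belief) - expected_h)


def choose_action(belief, actions):
    """Greedy (one-step) choice: maximize information gain minus cost.

    actions maps a name to (obs_model, cost); cost is in bits (an assumption).
    """
    scores = {name: expected_info_gain(belief, model) - cost
              for name, (model, cost) in actions.items()}
    return max(scores, key=scores.get), scores


belief = np.array([0.4, 0.3, 0.2, 0.1])  # current belief over four cells
# Hypothetical pan-and-tilt camera aimed at cell 0: columns are (detected, not detected).
aim_at_cell_0 = np.array([[0.9, 0.1], [0.1, 0.9], [0.1, 0.9], [0.1, 0.9]])
actions = {
    "pan_tilt_camera": (aim_at_cell_0, 0.1),
    "expensive_detector": (np.eye(4), 1.6),  # localizes perfectly, but costly
    "stay_idle": (np.ones((4, 1)), 0.0),     # uninformative, free
}
best, scores = choose_action(belief, actions)
print(best, {name: round(s, 3) for name, s in scores.items()})
```

With these numbers the cheap camera action wins (a score of roughly 0.41 against roughly 0.25 for the detector); making the camera more expensive or the detector cheaper can flip the decision, which is exactly the trade-off the task-derived costs are meant to control.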

In general, we consider decision-theoretic approaches to cooperative active perception. We propose to use Partially Observable Markov Decision Processes (POMDPs) as a framework for cooperative active perception. POMDPs provide an elegant way to model the interaction of an active sensor with its environment. Based on prior knowledge of the sensor model and the environment dynamics, we can compute policies that tell the active sensor how to act based on the observations it receives. As we are essentially dealing with multiple decision makers, it could also be beneficial to model (a subset of) the sensors as a decentralized POMDP (Dec-POMDP). In a cooperative perception framework, an important task encoded by the (Dec-)POMDP could be to reduce the uncertainty in the system's view of the environment as much as possible. Entropy is a suitable measure of this uncertainty. However, a POMDP formulation also lets us tackle more elaborate scenarios, for instance ones in which the tracking of certain objects is prioritized. In particular, POMDPs inherently trade off task completion against information gathering.
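To make the POMDP machinery more concrete, the sketch below shows the Bayesian belief update an active sensor performs after taking action a and receiving observation z, b'(s') ∝ O(z | s', a) Σ_s T(s' | s, a) b(s), together with one hypothetical belief-based reward that prioritizes some tracked objects over others by weighting their entropies. The transition and observation matrices, the weights, and the reward are illustrative assumptions, not a model taken from this work. Standard POMDPs define rewards on states, so rewarding low entropy directly is a belief-dependent variant, and computing an actual (Dec-)POMDP policy from such a model requires a planner that is outside the scope of this sketch.

```python
import numpy as np


def belief_update(belief, T, O, z):
    """POMDP belief update for a fixed action a.

    T[s, s2] = P(s2 | s, a)  (environment dynamics)
    O[s2, z] = P(z | s2, a)  (sensor model)
    """
    predicted = belief @ T              # prediction: sum_s P(s2 | s, a) b(s)
    unnormalized = predicted * O[:, z]  # correction with the received observation z
    return unnormalized / unnormalized.sum()


def entropy(belief):
    """Shannon entropy (bits) of a discrete belief."""
    nz = belief[belief > 0]
    return float(-(nz * np.log2(nz)).sum())


def weighted_entropy_reward(beliefs, weights):
    """Hypothetical reward: -sum_i w_i * H(b_i) over independently tracked objects.

    A larger weight w_i makes uncertainty about object i more costly,
    so a planner optimizing this reward would favor tracking object i.
    """
    return -sum(w * entropy(b) for b, w in zip(beliefs, weights))


# Hypothetical model: a person moves along four cells in a corridor.
T = np.array([[0.8, 0.2, 0.0, 0.0],
              [0.1, 0.8, 0.1, 0.0],
              [0.0, 0.1, 0.8, 0.1],
              [0.0, 0.0, 0.2, 0.8]])
# Camera aimed at cell 0; observation 0 = "detected", 1 = "not detected".
O = np.array([[0.9, 0.1], [0.1, 0.9], [0.1, 0.9], [0.1, 0.9]])

b0 = np.full(4, 0.25)
b1 = belief_update(b0, T, O, z=0)  # the camera reports a detection
beliefs = [b1, np.full(4, 0.25)]   # object A was just observed, object B was not
print(b1.round(3), weighted_entropy_reward(beliefs, weights=[3.0, 1.0]))
```

Here the detection concentrates the belief on cell 0 (about 0.72). Swapping the two beliefs, so that the low-priority object B is the one that was observed, yields a lower reward whenever the observed belief has less than the uniform 2 bits of entropy; this is how the weighting turns into a tracking priority.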