AuReRo: Augmented Reality Robotics

[[Image: | [[Image:RAPOSA-NG-GIPS-1.jpg|250px|thumb|RAPOSA-NG platform]] | ||

Field robotics is the use of sturdy robots in unstructured environments. One important example of such a scenario is in Search And Rescue (SAR) operations to seek out victims of catastrophic events in urban environments. While advances in this domain have the potential to save human lives, many challenging problems still hinder the deployment of SAR robots in real situations. This project tackles one such crucial issue: effective real time mapping. To address this problem, we adopt a multidisciplinary approach by drawing on both Robotics and Human Computer Interaction (HCI) techniques and methodologies. | Field robotics is the use of sturdy robots in unstructured environments. One important example of such a scenario is in Search And Rescue (SAR) operations to seek out victims of catastrophic events in urban environments. While advances in this domain have the potential to save human lives, many challenging problems still hinder the deployment of SAR robots in real situations. This project tackles one such crucial issue: effective real time mapping. To address this problem, we adopt a multidisciplinary approach by drawing on both Robotics and Human Computer Interaction (HCI) techniques and methodologies. | ||

| Line 12: | Line 21: | ||

{| | {| | ||

| [[Image:Screen Shot 2011-12-01 at 11.23.42 PM (small).jpg| | | [[Image:Screen Shot 2011-12-01 at 11.23.42 PM (small).jpg|350px|thumb|3D reconstruction of lab from RGB-D camera data]] | ||

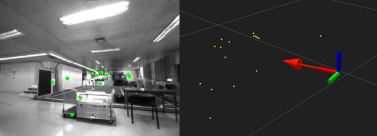

| [[Image:Slam6d.png|377px|thumb|Monocular 6D-SLAM in lab]] | | [[Image:Slam6d.png|377px|thumb|Monocular 6D-SLAM in lab. Left: detected features in one of the cameras, right: robot pose estimation]] | ||

|- | |||

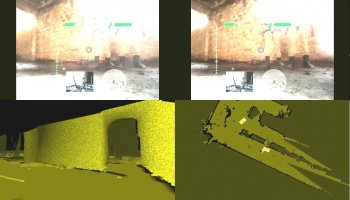

| [[Image:AR-GIPS-1.jpg|350px|thumb|Teleoperation interface. Top: augmented reality as seen from the Head-Mounted Display, bottom: 3D and 2D maps of the environment from laser scan data.]] | |||

| [[Image:RAPOSA-NG-GIPS-2.png|377px|thumb|RAPOSA-NG being deployed by first responders in a Search and Rescue exercise]] | |||

|} | |} | ||

| Line 44: | Line 56: | ||

* João O'Neill (MSc candidate, IST/ISR) | * João O'Neill (MSc candidate, IST/ISR) | ||

* José Gomes Reis (MSc, IST/ISR) | * José Gomes Reis (MSc, IST/ISR) | ||

* | |||

== Robot description == | |||

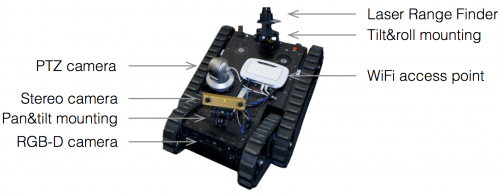

[[Image:RaposaNG description.png|500px]] | |||

Although the methodologies developed in this project apply to a broad range of robots, we have developed and implemented them on the RAPOSA-NG platform. This platform was used in various experimental scenarios, including controlled lab experiments, scientific competitions, and Search and Rescue exercises involving first responders. A complete description of this platform used in this project can be found here: [[RAPOSA-NG]]. | |||

== Media == | |||

The following Youtube playlist contains four videos showing the participation of our team in several scenarios: | |||

* field exercise in a joint operation with the GIPS/GNR Search and Rescue team (2014) | |||

* ANAFS/GREM-2013 Search and Rescue exercise, showing a joint operation with GIPS/GNR (2013) | |||

* Robot Rescue League of the international scientific event RoboCup in Eindhoven, NL (2013) | |||

* Robot Rescue League of the international scientific event RoboCup German Open in Magdeburg, DE (2012) | |||

<html><iframe width="560" height="315" src="//www.youtube.com/embed/videoseries?list=PLsqyNWcoPcL804X-3jQ-v8o583vN7pV7c" frameborder="0" allowfullscreen></iframe></html> | |||

== Publications == | == Publications == | ||

| Line 83: | Line 110: | ||

* João José Gomes Reis, Immersive Robot Teleoperation Using an Hybrid Virtual and Real Stereo Camera Attitude Control, MSc thesis, Instituto Superior Técnico, 2012. — [http://welcome.isr.ist.utl.pt/img/pdfs/2985_immersive.pdf PDF] | * João José Gomes Reis, Immersive Robot Teleoperation Using an Hybrid Virtual and Real Stereo Camera Attitude Control, MSc thesis, Instituto Superior Técnico, 2012. — [http://welcome.isr.ist.utl.pt/img/pdfs/2985_immersive.pdf PDF] | ||

== Previous work == | == Previous work == | ||

Latest revision as of 16:56, 3 February 2018

Website: http://aurero.isr.ist.utl.pt

Funding

- FRARIM: Human-robot interaction with field robots using augmented reality and interactive mapping (PTDC/EIA-CCO/113257/2009)

- LARSyS Plurianual Budget (PEst-OE/EEI/LA0009/2011)

Executive Summary

Field robotics is the use of sturdy robots in unstructured environments. One important example is Search And Rescue (SAR) operations, in which robots seek out victims of catastrophic events in urban environments. While advances in this domain have the potential to save human lives, many challenging problems still hinder the deployment of SAR robots in real situations. This project tackles one such crucial issue: effective real-time mapping. To address this problem, we adopt a multidisciplinary approach, drawing on both Robotics and Human-Computer Interaction (HCI) techniques and methodologies.

To achieve effective human-robot interaction (HRI), we propose presenting a pair of stereo camera feeds from a robot to an operator through an Augmented Reality (AR) head-mounted display (HMD). This will provide an immersive experience to the operator and can display rich contextual information. To further enhance this display, we will superimpose mapping data generated by SLAM-6D (Simultaneous Localization And Mapping) algorithms over the video input using AR techniques. Three modes of display will be available: (1) a top-view 2D map, to aid navigation and provide an overview of spaces; (2) a rendered 3D model, to allow the operator to explore a virtual model of the environment in detail; and (3) a superimposed rendered 3D model, synchronized with the operator's field of view to form an augmented camera view. To achieve this last mode, we will track the angular position of the HMD and use it to control both the orientation of the robot's cameras and the rendering of the AR scene.
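As a rough illustration of the last display mode, the sketch below maps a tracked HMD orientation to a pan-and-tilt command and to a rotation matrix that a renderer could use for the AR scene. It is not the project's implementation: the function names, the mount limits (90 degrees of pan, 45 degrees of tilt), and the yaw-then-pitch axis convention are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PanTiltLimits:
    """Hypothetical mechanical limits of the camera mount, in radians."""
    pan_max: float = math.radians(90.0)
    tilt_max: float = math.radians(45.0)


def clamp(value, low, high):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def hmd_to_pan_tilt(yaw, pitch, limits=PanTiltLimits()):
    """Map HMD yaw and pitch (radians) to a pan/tilt command within the limits."""
    pan = clamp(yaw, -limits.pan_max, limits.pan_max)
    tilt = clamp(pitch, -limits.tilt_max, limits.tilt_max)
    return pan, tilt


def view_rotation(yaw, pitch):
    """Return the 3x3 rotation Rz(yaw) * Ry(pitch) as nested lists.

    Assumed convention: yaw about the vertical z axis, then pitch about
    the y axis. A renderer would use this to orient the virtual camera.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    return [
        [cy * cp, -sy, cy * sp],
        [sy * cp, cy, sy * sp],
        [-sp, 0.0, cp],
    ]


if __name__ == "__main__":
    # The operator turns the head 120 degrees left and looks 10 degrees down.
    pan, tilt = hmd_to_pan_tilt(math.radians(120.0), math.radians(-10.0))
    print(math.degrees(pan), math.degrees(tilt))
```

In this sketch the same yaw and pitch feed both the mount command and the rendering, so the overlaid 3D model stays aligned with the camera view while the mount is within its limits. What should happen once the mount saturates is a design choice that the text above does not specify.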

We will use real firefighter training camps as an experimental setup, leveraging a prior relationship between IST/ISR and the Lisbon firefighter corporation. The cooperation with firefighter teams will also provide subjects for initial task analyses and later usability evaluations of the system in the field. We will use robotic platforms already available at IST/ISR (e.g., the RAPOSA robot, together with other commercial platforms), upgrading them with the equipment needed to achieve the project goals: 3D ranging sensors, calibrated stereo camera pairs on a pan-and-tilt mount, and increased computing power.

Partners

- Institute for Systems and Robotics (IST/ISR), Instituto Superior Técnico (Lisbon, Portugal)

ISR-Lisbon is a university-based R&D institution that carries out multidisciplinary advanced research in the areas of Robotics and Information Processing, including Systems and Control Theory, Signal Processing, Computer Vision, Optimization, AI and Intelligent Systems, and Biomedical Engineering. Applications include Autonomous Ocean Robotics, Search and Rescue, Mobile Communications, Multimedia, Satellite Formation, and Robotic Aids.

- Madeira Interactive Technologies Institute (M-ITI) (Madeira, Portugal)

The work of the institute mainly concentrates on innovation in the areas of computer science, human-computer interaction, and entertainment technology. Feel welcome to explore the information here on our educational programs and our research efforts.

People

- Rodrigo Ventura (Principal Investigator, IST/ISR)

- Ian Oakley (Faculty, M-ITI)

- Alexandre Bernardino (Faculty, IST/ISR)

- José Gaspar (Faculty, IST/ISR)

- José Gouveia Pereira Corujeira (MSc, M-ITI)

- Filipe Jesus (MSc, IST/ISR)

- Pedro Vieira (MSc, IST/ISR)

- João Mendes (MSc, IST/ISR)

- João O'Neill (MSc candidate, IST/ISR)

- José Gomes Reis (MSc, IST/ISR)

Robot description

Although the methodologies developed in this project apply to a broad range of robots, we have developed and implemented them on the RAPOSA-NG platform. This platform was used in various experimental scenarios, including controlled lab experiments, scientific competitions, and Search and Rescue exercises involving first responders. A complete description of the platform can be found on the RAPOSA-NG page.

Media

The following YouTube playlist (https://www.youtube.com/embed/videoseries?list=PLsqyNWcoPcL804X-3jQ-v8o583vN7pV7c) contains four videos showing our team's participation in several scenarios:

- field exercise in a joint operation with the GIPS/GNR Search and Rescue team (2014)

- ANAFS/GREM-2013 Search and Rescue exercise, showing a joint operation with GIPS/GNR (2013)

- Robot Rescue League of the international scientific event RoboCup in Eindhoven, NL (2013)

- Robot Rescue League of the international scientific event RoboCup German Open in Magdeburg, DE (2012)

Publications

2014

- Henrique Martins, Ian Oakley, and Rodrigo Ventura. Design and evaluation of a head-mounted display for immersive 3-D teleoperation of field robots. Robotica, Cambridge University Press, 2014. — PDF

- Rodrigo Ventura. New Trends on Medical and Service Robots: Challenges and Solutions, volume 20 of MMS, chapter Two Faces of Human-robot Interaction: Field and Service robots, pages 177–192. Springer, 2014. — PDF

2013

- Pedro Vieira and Rodrigo Ventura. Interactive mapping using range sensor data under localization uncertainty. Journal of Automation, Mobile Robotics & Intelligent Systems, 6(1):47–53, 2013. — PDF

- Filipe Jesus and Rodrigo Ventura. Simultaneous localization and mapping for tracked wheel robots combining monocular and stereo vision. Journal of Automation, Mobile Robotics & Intelligent Systems, 6(1):21–27, 2013. — PDF

- José G. P. Corujeira and Ian Oakley. 2013. Stereoscopic egocentric distance perception: the impact of eye height and display devices. In Proceedings of the ACM Symposium on Applied Perception (SAP '13). ACM, New York, NY, USA, 23-30. DOI=10.1145/2492494.2492509 — PDF

2012

- Rodrigo Ventura and Pedro U. Lima. Search and rescue robots: The civil protection teams of the future. In Proceedings of International Conference on Emerging Security Technologies (EST), pages 12–19, 2012. — PDF

- Pedro Vieira and Rodrigo Ventura. Interactive mapping in 3D using RGB-D data. In IEEE International Symposium on Safety Security and Rescue Robotics (SSRR’12), College Station, TX, 2012. — PDF

- Filipe Jesus and Rodrigo Ventura. Combining monocular and stereo vision in 6D-SLAM for the localization of a tracked wheel robot. In IEEE International Symposium on Safety Security and Rescue Robotics (SSRR’12), College Station, TX, 2012. — PDF

- Pedro Vieira and Rodrigo Ventura. Interactive mapping using range sensor data under localization uncertainty. In International Workshop on Perception for Mobile Robots Autonomy (PEMRA’12), Poznan, Poland, 2012. — PDF

- Filipe Jesus and Rodrigo Ventura. Simultaneous localization and mapping for tracked wheel robots combining monocular and stereo vision. In International Workshop on Perception for Mobile Robots Autonomy (PEMRA’12), Poznan, Poland, 2012. — PDF

- Pedro Vieira and Rodrigo Ventura. Interactive 3D scan-matching using RGB-D data. In IEEE International Conference on Emerging Technologies and Factory Automation (ETFA’12), Kraków, Poland, 2012. — PDF

- João José Reis and Rodrigo Ventura. Immersive robot teleoperation using an hybrid virtual and real stereo camera attitude control. In Proceedings of Portuguese Conference on Pattern Recognition (RecPad), Coimbra, Portugal, 2012. — PDF

- Filipe Miguel Oliveira de Jesus, Simultaneous Localization and Mapping using Vision for Search and Rescue Robots, MSc thesis, Instituto Superior Técnico, 2012. — PDF

- Pedro Gonçalo Soares Vieira, Interactive Mapping Using Range Sensor Data under Localization Uncertainty, MSc thesis, Instituto Superior Técnico, 2012. — PDF

- João José Gomes Reis, Immersive Robot Teleoperation Using an Hybrid Virtual and Real Stereo Camera Attitude Control, MSc thesis, Instituto Superior Técnico, 2012. — PDF

Previous work

Projects

- SocRob-RESCUE (RoboCup RRL team)

- RAPOSA robot

- Rescue project

Publications

- Immersive 3-D Teleoperation of a Search and Rescue Robot Using a Head-Mounted Display, Henrique Martins, Rodrigo Ventura, IEEE International Conference on Emerging Technologies and Factory Automation (ETFA-09), Mallorca, Spain, 2009. — PDF

- Robust Autonomous Stair Climbing by a Tracked Robot Using Accelerometer Sensors, Jorge Ferraz, Rodrigo Ventura, International Conference on Climbing and Walking Robots (CLAWAR-09), Istanbul, Turkey, 2009. — PDF

- Autonomous docking of a tracked wheels robot to its tether cable using a vision-based algorithm, Fausto Ferreira, Rodrigo Ventura, Workshop on Robotics for Disaster Response, ICRA 2009 - IEEE International Conference on Robotics and Automation, Kobe, Japan, 2009. — PDF

- A Search and Rescue Robot with Tele-Operated Tether Docking System, C. Marques, J. Cristovão, P. Alvito, Pedro Lima, João Frazão, M. Isabel Ribeiro, Rodrigo Ventura, Industrial Robot, Emerald Group Publishing Limited, Vol. 34, No. 4, pp. 332-338, 2007. — PDF

- RAPOSA: Semi-Autonomous Robot for Rescue Operations, C. Marques, J. Cristovão, Pedro Lima, João Frazão, M. Isabel Ribeiro, Rodrigo Ventura, Proc. of IROS2006 - IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 2006. — PDF